At TVASurg we love making engaging educational videos for teaching surgery. All members of our production team are graduates of the esteemed Biomedical Communications program at the University of Toronto–Mississauga, where we received focused training on communicating topics in life science with the same techniques used in the 3D animation industry. These include modelling, rigging, shading, texturing, animation, lighting, rendering and compositing. In previous posts we discussed 3D modelling and 3D animation, and this month we’ll take a look at what makes our animations really shine (literally!)–3D rendering.

3D rendering is the final phase of 3D animation, where you export your animation scene to still images or video files that can then be read by compositing software to create the final video file. There’s a lot you can do in 3D animation software to make the image look as good as possible, but compositing software lets you go further: you can improve the look with colour adjustments and other post effects like depth blur, and combine the animation shots with footage, audio and text elements. You can also make an animation much easier to change by dividing it up into shots, rendering to image sequences, and using render passes. We’ll cover compositing in a future blog post, but first, we need the raw assets used in compositing: our renders.

There are a few key things that produce the beautiful renders in 3D workflows: 1) the models, lights, and cameras in your 3D scene, 2) the “shaders” or materials applied to the models and their settings, which may or may not include texture maps, and 3) the render settings of the scene, which are dictated by the render engine you are using.

MODELS

Models are where the render begins; you need something to see in the render, after all! In the modelling phase, we have to be mindful of how the geometry of the 3D model is arranged so that it gives the proper results when we go to render. There are three important considerations: surface topology, UVs, and polygon “normals”.

A model’s surface topology is something we covered in our 3D modelling blog, and essentially this is the arrangement of vertex points, the edges that connect those points, and the triangles or polygons that those edges describe.

![[image for model surface] [image for model surface]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_models2.jpg)

In 3D rendering, the software has to calculate what colour and brightness value to assign to each pixel in the final rendered image. Imagine placing a flat screen or window between the viewer and an object, much the same way artists in the European Renaissance would set up a grid made of strings to break apart a scene they were looking at into smaller, more manageable squares. In computer graphics software, this is a necessary part of the image-making process. When the viewer (in this case, the render engine) looks through each square in the grid (in 3D rendering, each square is a pixel), a set of numbers is determined that acts as a code for the amounts of red, green, and blue that contribute to the final colour.

So how does this relate to the model’s surface topology we just mentioned? 3D render engines use a technique called “ray tracing” to calculate the colour of each pixel in the final rendered image based on the angle at which each polygon of the model’s surface is facing the 3D scene camera and each of the 3D scene lights that are hitting it. Ray tracing actually starts at the endpoint, the camera, and traces backward from the camera lens to the light source.

![[image for ray tracing] [image for ray tracing]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_rayPath.jpg)
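To make the backward tracing described above a little more concrete, here is a minimal Python sketch of a single camera ray. It is only an illustration under our own assumptions: one sphere stands in for a polygon mesh, there is a single point light, and brightness follows Lambert's cosine law (the cosine of the angle between the surface normal and the direction to the light). The names `hit_sphere` and `shade_pixel` are hypothetical and do not come from any render engine.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def hit_sphere(origin, direction, center, radius):
    """Distance along the ray to the nearest hit, or None if the ray misses."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c  # direction is unit length, so the quadratic's a == 1
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None

def shade_pixel(px, py, width, height, light_pos):
    """Trace one ray from the camera through pixel (px, py); return an RGB tuple."""
    camera = (0.0, 0.0, 0.0)
    # Assumed scene: one red sphere in front of the camera
    center, radius, base_colour = (0.0, 0.0, -3.0), 1.0, (200, 60, 60)
    # Map the pixel centre onto an image plane one unit in front of the camera
    x = (px + 0.5) / width * 2.0 - 1.0
    y = 1.0 - (py + 0.5) / height * 2.0
    direction = normalize((x, y, -1.0))
    t = hit_sphere(camera, direction, center, radius)
    if t is None:
        return (0, 0, 0)  # background
    hit = tuple(o + t * d for o, d in zip(camera, direction))
    normal = normalize(tuple(h - c for h, c in zip(hit, center)))
    to_light = normalize(tuple(l - h for l, h in zip(light_pos, hit)))
    brightness = max(0.0, dot(normal, to_light))  # surfaces facing away get 0
    return tuple(round(ch * brightness) for ch in base_colour)
```

Calling `shade_pixel` once for every pixel in the grid would fill in a complete, if very simple, image. A production render engine adds shadows, reflections, and many rays per pixel on top of this basic idea.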

Each polygon also has a surface “normal”, which is essentially the direction considered its “front”. If the normals are flipped, or not synchronized across a model’s surface, it may appear inside-out or appear to have holes.

![[image for polygon normals] [image for polygon normals]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_polygonNormals.jpg)
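As a rough sketch of how a polygon's “front” can be determined, the Python below derives a triangle's normal from the order of its vertices using a cross product, then checks whether that normal points toward the camera. The counter-clockwise winding convention and the function names are our assumptions for illustration; individual 3D packages may follow different conventions.

```python
def face_normal(a, b, c):
    """Unnormalized normal of triangle a-b-c from the cross product of two edges."""
    u = tuple(q - p for p, q in zip(a, b))  # edge a -> b
    v = tuple(q - p for p, q in zip(a, c))  # edge a -> c
    return (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )

def faces_camera(a, b, c, camera_pos):
    """True if the triangle's front side is turned toward the camera."""
    n = face_normal(a, b, c)
    to_camera = tuple(cam - p for p, cam in zip(a, camera_pos))
    return sum(x * y for x, y in zip(n, to_camera)) > 0

# The same triangle with its vertex order reversed has a flipped normal.
a, b, c = (0, 0, 0), (1, 0, 0), (0, 1, 0)
camera = (0, 0, 5)
print(faces_camera(a, b, c, camera))  # True: front faces the camera
print(faces_camera(a, c, b, camera))  # False: normal is flipped
```

A mesh where neighbouring polygons disagree in this test is the kind of model that can look inside-out or full of holes when rendered.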

Since each polygon is situated in 3D space consisting of XYZ coordinates, we need another set of values to describe the width and height of each polygon independent of its 3D orientation, and for those, we use “U” and “V”. If we take the entire mesh of polygons that make up a 3D model and lay them flat, we get the UV map for the model. This is absolutely critical for texture mapping, where we project a 2D flat image onto a 3D model.

![[image for UV map] [image for UV map]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_UVmap2.jpg)
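The link between UVs and a flat image can be sketched in a few lines of Python. Here a “texture” is just a small grid of colours, and a UV pair picks one of them. We assume U and V both run from 0 to 1 with V = 0 at the bottom of the image and use the simplest possible (nearest-neighbour) lookup; `interpolate_uv` and `sample_texture` are hypothetical names, not functions from any 3D package.

```python
def interpolate_uv(uv_a, uv_b, uv_c, weights):
    """Blend the UVs stored at a triangle's three corners for a point inside it.

    weights are barycentric weights (wa, wb, wc) that sum to 1.
    """
    wa, wb, wc = weights
    u = wa * uv_a[0] + wb * uv_b[0] + wc * uv_c[0]
    v = wa * uv_a[1] + wb * uv_b[1] + wc * uv_c[1]
    return (u, v)

def sample_texture(texture, u, v):
    """Nearest-neighbour lookup; texture is a list of rows, row 0 is the top."""
    height = len(texture)
    width = len(texture[0])
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)  # flip V: 0 is the bottom
    return texture[row][col]

RED, GREEN, BLUE, WHITE = (255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)
texture = [
    [RED, GREEN],   # top row
    [BLUE, WHITE],  # bottom row
]
print(sample_texture(texture, 0.25, 0.75))  # upper-left texel
```

Because every point on the model's surface has its own UV pair, the renderer can always find which part of the flat image belongs there, no matter how the polygon is oriented in XYZ space.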

The calculations a render engine has to compute take into account three groups of attributes. First are the attributes of the camera, such as its XYZ position in 3D space and properties of the lens like focal length. Second are the features of each polygon of the 3D mesh that a ray makes contact with, which include the angle the polygon is facing, its shader attributes such as base colour, reflection strength and falloff, and any information provided by texture maps. Third are the attributes of the scene light objects, most notably their brightness setting(s), spread/radius, and falloff. That’s a lot to calculate!

LIGHTS

Just like a professional photo or video shoot in the physical world, we need to add lights to our scene to determine the direction of shadows, control the areas of greatest illumination, and dictate how the forms and volumes of our scene will be perceived by the viewer. In 3D rendering software we have incredible control over the lighting in a scene. Every light object has settings for intensity, falloff, and colour temperature, just to name a few.

![[image for 3D lights] [image for 3D lights]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_light-types_labels.jpg)

There are several different light objects we can make use of. Point lights emanate illumination in all directions from a single point in 3D space. Unlike in the real world, this can truly be a single point light source: no wires or support structures are required, and it simply floats in space. Spot lights are closer to what we encounter in real life when we use a flashlight: the light has a direction and a defined circumference of illumination. The edges of this “spot” of light might be sharp or have a soft falloff, sometimes referred to as the “penumbra”, the partially lit zone between full light and full shadow. In 3D software we can control this gradient of light at the edge by using slider controls in the light object’s attribute settings.

![[image for light attribute settings] [image for light attribute settings]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_light-settings2.jpg)
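One way to picture what an edge-softness slider does is the small Python sketch below. It assumes the spot light has an inner cone (fully lit) and an outer cone (unlit beyond it), with a smooth blend in between. The parameter names `inner_deg` and `outer_deg` are our own for illustration and are not the attribute names used by Cinema 4D or any other package.

```python
def smoothstep(edge0, edge1, x):
    """Smooth 0-to-1 ramp between edge0 and edge1, clamped outside that range."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)

def spot_intensity(angle_deg, inner_deg, outer_deg, intensity=1.0):
    """Light reaching a point that sits angle_deg away from the spot's axis."""
    if outer_deg <= inner_deg:
        # No penumbra: a hard-edged spot
        return intensity if angle_deg <= inner_deg else 0.0
    return intensity * (1.0 - smoothstep(inner_deg, outer_deg, angle_deg))

for angle in (10, 22, 25, 28, 35):
    print(angle, spot_intensity(angle, inner_deg=20, outer_deg=30))
```

Moving `outer_deg` closer to `inner_deg` narrows the soft band and sharpens the edge of the spot; moving them apart widens the penumbra.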

Area lights are similar to spot lights in that they have a defined direction and cast light onto a finite area. Their shape, spread and intensity can all be controlled, and we can even add a texture to the light! Lastly, we have directional lights, sky objects and dome lights, all variations on the same theme: light that covers your entire 3D scene so that any object in it will receive illumination.

You can add a lot of visual interest to a scene by using a high dynamic range image (HDRI), typically a panoramic photo, as the texture map of the sky or dome light; the scene is then lit according to the brightness of the image. The image itself will be reflected on the models and show up in the reflection passes for your renders. This powerful 3D rendering effect must be wielded carefully, though: you don’t want an English countryside reflected on anatomy that’s supposed to be part of a surgical scene. That would really throw off your audience.

![[image for HDRI dome] [image for HDRI dome]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_HDRdome-render4.jpg)
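For the curious, here is a hedged Python sketch of how a direction in the scene could be matched to a spot on a panoramic image. It assumes the panorama uses the common latitude-longitude (equirectangular) layout, that Y is up, and that the centre of the image sits straight ahead along negative Z. Renderers differ on these conventions, so treat the exact mapping and the function name as assumptions.

```python
import math

def direction_to_latlong_uv(direction):
    """Map a unit direction (x, y, z) to (u, v) coordinates on a lat-long panorama."""
    x, y, z = direction
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)              # around the horizon
    v = 0.5 + math.asin(max(-1.0, min(1.0, y))) / math.pi      # horizon to zenith
    return (u, v)

print(direction_to_latlong_uv((0.0, 0.0, -1.0)))  # straight ahead: image centre
```

Looking up the panorama's colour at that UV, for a ray that leaves the scene or bounces off a reflective surface, is what lets the photo both light the models and appear in their reflections.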

CAMERAS

There is always at least one camera object present in a 3D scene. Whenever we are navigating our 3D scene by rotating or zooming our vantage point, we are doing so by using a default scene camera, but we can and do add more cameras to save their positions and animate between various vantage points as we tell our story.

![[image for 3D cameras] [image for 3D cameras]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_spot-light-and-camera2.jpg)

Assigning different cameras to discrete sets of movements helps us keep track of what we’re showing and for what purpose. An animation is like any other story that has a beginning, middle and end. Along the way we divide up that story further into chapters, and in 3D animation we break chapters down into sequences and sequences into shots, and shots may even have several different takes. In our 3D scene file we can create multiple cameras to capture discrete shots, and assign these cameras to a specific set of models and rendering conditions using a built-in organizational feature in Cinema 4D called the “Take System”. Other software options like Autodesk Maya have similar systems for organizing multiple shots within one project.

![[image for scene setup] [image for scene setup]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_renderSettings-output.jpg)

SHADERS

Each model (a continuous piece of geometry) in a 3D scene needs a material applied to it for rendering. The terminology can get a bit confusing because several terms are often used interchangeably (shader, material, texture, substance, surface, etc.), but essentially we are talking about a collection of settings that describe the surface appearance of the model.

![[image for 3D shaders] [image for 3D shaders]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_shadersC4D.png)

Shown here is an array of different shaders we’ve made and modified over the years at TVASurg. In some cases, we can re-use shaders to save time, but sometimes a new case will have new anatomy or tools that call for custom shaders, or there may be necessary adjustments to update the shaders for a cohesive aesthetic.

A “material” is the parent object in this hierarchy and is previewed with a sphere icon in our Cinema 4D software in the Material manager, giving an idea of what kind of surface appearance the material provides. A shader is one of several components that make up a material, though sometimes a material is really only one main shader doing the work, hence the terms being used interchangeably.

With the types of anatomical structures we generally show in the surgical specialties we’ve featured on our Atlas, we’ve made extensive use of a 3D shading method called “procedural shaders”. A procedural shader uses math to create seamless fractal-like patterns that can be applied across the surface of a 3D model without relying on the UV information, which lets us skip that step of the texturing process most of the time. There’s a lot you can do with repeating patterns in different components of the 3D material. These sub-shaders, called “channels” in Cinema 4D materials, control aspects of a surface appearance such as the base colour, the reflection, or the surface texture.

TEXTURE MAPS

Most people are familiar with the word “texture” as a description of the roughness or softness of an object’s surface, but in 3D rendering workflows “texture” means something very specific: a flat, 2D representation of how a surface will appear, which is then wrapped around the 3D mesh of a model. A texture map is a 2D image of the surface appearance whose pixels are matched to a specific piece of geometry through that model’s UV coordinates, a customized flat layout of its 3D polygons. In other words, a model’s UVs define how its 3D surface is represented as a flat 2D image. The 3D sculpting software Pixologic ZBrush has a built-in feature that allows us to animate this relationship, which is immensely helpful in understanding this concept.

By painting our models and saving out the texture maps, we can add details that would otherwise not be possible, or would be very difficult to reproduce with the procedural shading method we described above.

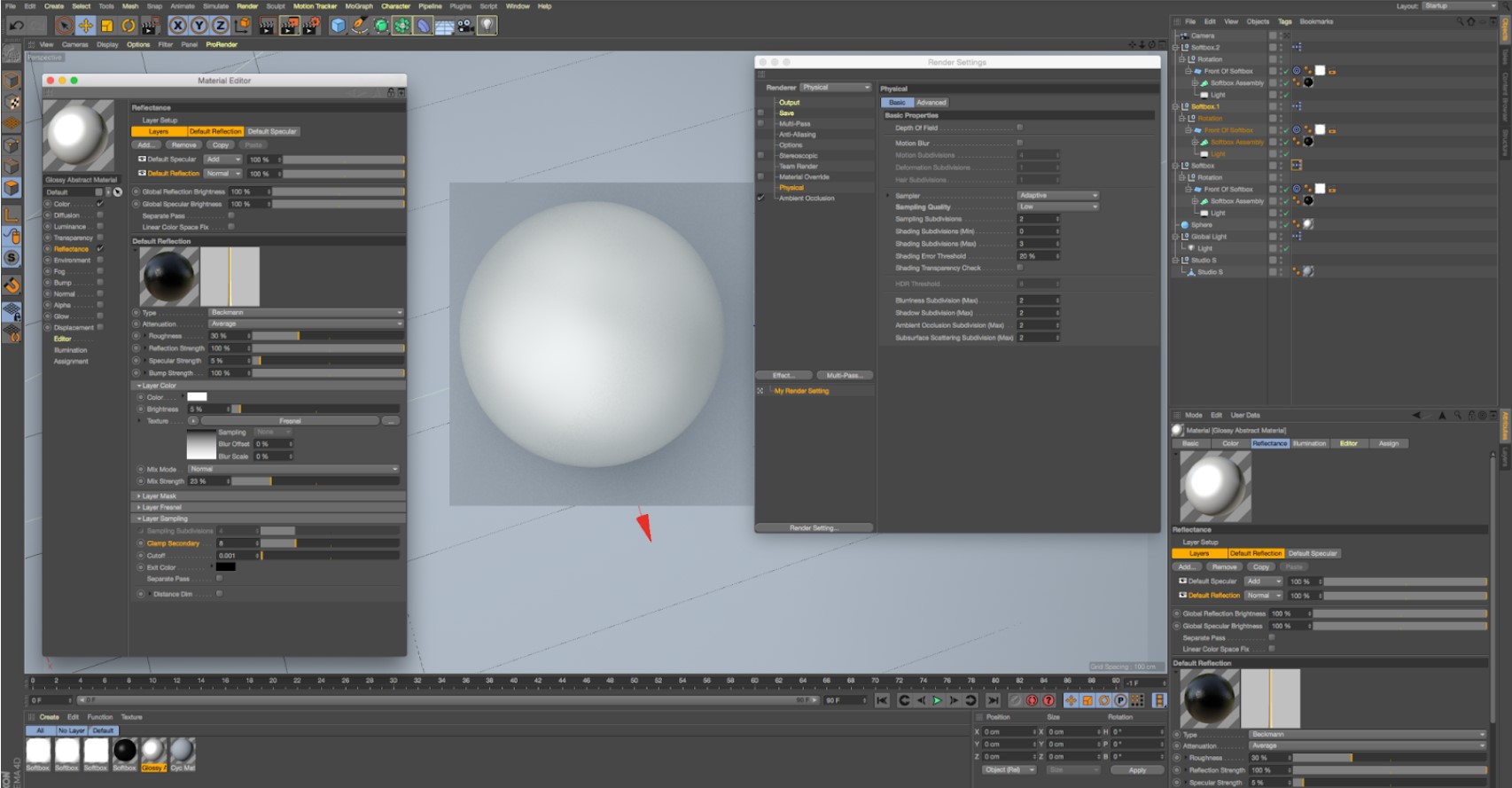

RENDER SETTINGS

The settings you use for your renders will have a big impact on the image quality, most noticeably in terms of the degree of pixelation you encounter. Jagged edges of objects can be cleaned up using anti-aliasing settings, and graininess that dances across surfaces is usually cleaned up by increasing the “samples”.
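The effect of taking more samples can be sketched with a single pixel that straddles the edge of an object. In the Python below, a regular grid of sub-samples inside the pixel is tested against a shape, and the fraction that land inside becomes the pixel's coverage. The diagonal test shape and the function names are hypothetical, and a regular grid is a simplification: production render engines use more sophisticated sampling patterns.

```python
def pixel_coverage(px, py, inside, samples_per_axis):
    """Fraction of sub-samples in pixel (px, py) that fall inside the shape."""
    n = samples_per_axis
    hits = 0
    for j in range(n):
        for i in range(n):
            # Sub-sample positions are spread evenly across the pixel
            x = px + (i + 0.5) / n
            y = py + (j + 0.5) / n
            if inside(x, y):
                hits += 1
    return hits / (n * n)

def below_diagonal(x, y):
    """Hypothetical shape whose edge cuts the first pixel exactly in half."""
    return x + y < 1.0

for n in (1, 4, 8, 32):
    print(n * n, "samples:", pixel_coverage(0, 0, below_diagonal, n))
```

With one sample the pixel is either fully on or fully off, which is what produces jagged edges. As the grid gets finer, the estimate moves toward the true coverage of one half, giving the in-between values that make an edge look smooth, at the cost of more calculations per pixel.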

In our render settings we’ll set the save location for our render sequences, the file type and the frame range. We can also separate out different aspects of the render into what we call “render passes”, which provide greater flexibility for editing in post. With the brightest parts of the image separated into a specular light pass, and the darkest shadows in deep crevices separated into an ambient occlusion pass, we can adjust these values in compositing software to greatly refine the image, and even change them over time so that the rendered image sequence changes in appearance as objects in the scene are animated.
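As a rough illustration of why separate passes are so flexible, the Python sketch below recombines three passes for a single pixel. The recipe (ambient occlusion darkens the diffuse colour, then the specular pass is added on top) is one common arrangement that we assume here for illustration; the exact math depends on the render engine and compositing software, and the parameter names are our own.

```python
def composite_pixel(diffuse, specular, ao, specular_gain=1.0, ao_strength=1.0):
    """Recombine render passes for one pixel.

    diffuse, specular: RGB tuples of floats in 0..1
    ao: ambient occlusion value, 1.0 = fully open, 0.0 = fully occluded
    specular_gain, ao_strength: adjustments made in compositing
    """
    occlusion = 1.0 - ao_strength * (1.0 - ao)
    return tuple(
        min(1.0, d * occlusion + s * specular_gain)
        for d, s in zip(diffuse, specular)
    )

diffuse = (0.5, 0.5, 0.5)
specular = (0.25, 0.25, 0.25)
print(composite_pixel(diffuse, specular, ao=1.0))                     # as rendered
print(composite_pixel(diffuse, specular, ao=1.0, specular_gain=0.0))  # highlights off
print(composite_pixel(diffuse, specular, ao=0.5))                     # in a crevice
```

Because `specular_gain` and `ao_strength` are just numbers, they can be changed, or animated frame by frame, without re-rendering the 3D scene.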

Certain rendering techniques have been developed to increase realism by using physically-based lighting maths, which result in accurate depictions of light falloff and light bouncing, but these methods can often come at a high cost of render time, so the settings within the render engine need to be carefully managed to eliminate redundant calculations that don’t actually add to render quality.

RENDER ENGINES

Most 3D animation software packages have at least one built-in render engine, with the option to add third-party render engines as well. The render engine is responsible for running the calculations and producing the final image file. In recent years several third-party render engines have appeared that can be used with any of the major 3D animation software packages, most notably Arnold, Corona, Octane, Redshift, and V-Ray. At TVASurg we’ve used the Arnold renderer for some of our recent animations. We’ll get more into specific render engines and settings for cool 3D effects like global illumination in a future post.

We hope you enjoyed this overview of the 3D rendering process for making high-end 3D animations. Keep an eye out for future installments, and if there’s any specific part of our production process you’d like to learn more about, please let us know!

–TVASurg Team

![[image for render settings] [image for render settings]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_renderSettings-physical.jpg)

![[image for render settings] [image for render settings]](http://128.100.190.12/TVASurg/wp-content/uploads/2021/10/TVASblog_3Drendering_frameRange.jpg)
